I’m sure by now you’ve heard enough about the adoption of AI by your favorite cybersecurity vendors. You may have also attended presentations or conference sessions about using AI to improve the speed and accuracy of detecting threats. But what about the offensive side of AI? Surely, the bad guys are thinking of ways of exploiting it, aren’t they?

In this blog post, I present some examples of AI techniques that can be used offensively, some of which already are.

AI, Specifically Machine Learning, is Driven by Data, and There’s a Lot Out There!

Thanks to several significant data breaches, a vast amount of our personal information is on the dark web, available for free or for a small amount of cryptocurrency. The recent US-based National Public Data breach has added at least another billion records. This is gold for bad actors who are savvy at using machine learning to sift through the data, build social graphs of people, and craft more sophisticated and convincing phishing campaigns than ever.

These bad actors also have another powerful AI tool: generative AI. These systems help them eliminate language barriers, allowing non-native speakers to generate emails and deep fake voice messages, photos, and videos that deceive even the most vigilant individuals. The typical red flags—like awkward grammar or misspellings—that used to give away phishing attempts are now becoming a thing of the past.

Here’s an example of a phishing email I generated using ChatGPT in seconds.

Generative AI creates a polished email; all the attacker has to do is fill in names from mining breach data to create highly targeted spear-phishing campaigns.

You may remember the Peele-Obama deepfake video from about six years ago. It was convincing enough to fool many viewers. In 2018, it took over 56 hours to create with the assistance of a video effects professional, but today, we are close to the point where an average hacker could produce something similar in a few hours from their basement.

Source: The Verge and YouTube

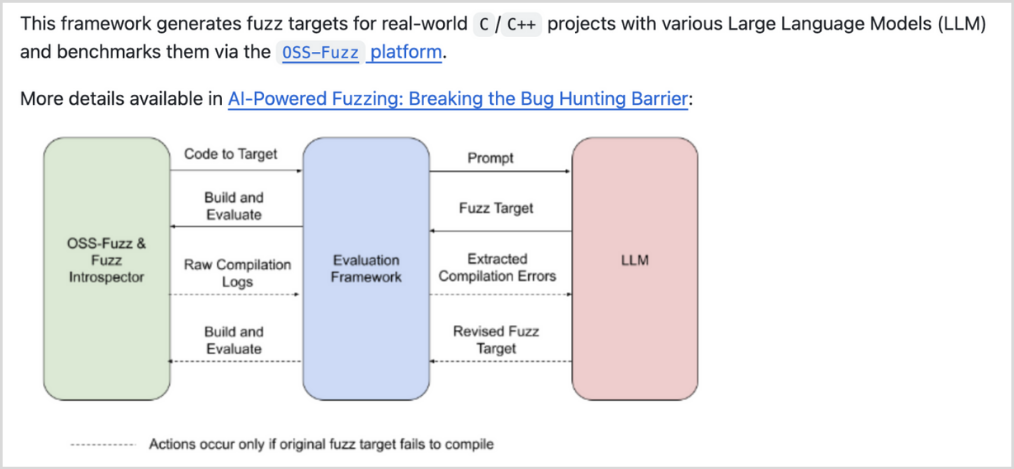

AI’s role in offensive cybersecurity is not limited to social engineering. It can also be used to find vulnerabilities. Let’s consider fuzzing. Fuzzing is a software testing technique that feeds invalid, unexpected, or random data to a program and observes how it behaves. Bugs or vulnerabilities in the code may cause crashes, unauthenticated access, or other behaviors a malicious user could exploit. In 2023, researchers at Google published a blog post on AI-powered fuzzing that presented their findings on using large language models (LLMs) to boost the performance of their open-source fuzzing framework. Google has since open-sourced this project so the community can find and fix problems before adversaries exploit them.
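To make the idea of fuzzing concrete, here is a minimal sketch of a purely random fuzzer in Python. It is not how OSS-Fuzz or Google's LLM-assisted framework works; `parse_record` is a hypothetical toy target with a deliberately planted bug, and `fuzz` is an illustrative helper name. The sketch only shows the basic loop: generate random input, run the target, and record anything that fails in an unexpected way.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical toy target: a length-prefixed record parser with a planted bug."""
    if len(data) < 1:
        raise ValueError("empty input")  # graceful, expected rejection
    length = data[0]
    payload = data[1:1 + length]
    # Planted bug: trusts the length byte without checking the real payload size,
    # so this index fails whenever the payload is shorter than `length`.
    _last_byte = payload[length - 1]
    return payload


def fuzz(target, iterations=1000, max_len=8, seed=0):
    """Feed random byte strings to `target` and collect unexpected failures."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            continue  # the target rejected bad input cleanly
        except Exception as exc:  # anything else counts as a "crash"
            crashes.append((data, type(exc).__name__))
    return crashes


if __name__ == "__main__":
    found = fuzz(parse_record)
    print(f"{len(found)} crashing inputs found")
    if found:
        print("example:", found[0])
```

Real fuzzers add coverage feedback, input mutation, and crash triage on top of this loop. The LLM-assisted work described above targets a different step: writing the fuzz targets that connect the fuzzer to the code under test.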

A Framework for Fuzz Target Generation and Evaluation

Source: https://github.com/google/oss-fuzz-gen

This is a significant contribution because it enables enterprises to level the playing field against bad actors who may also be using the same or similar frameworks to find software vulnerabilities.

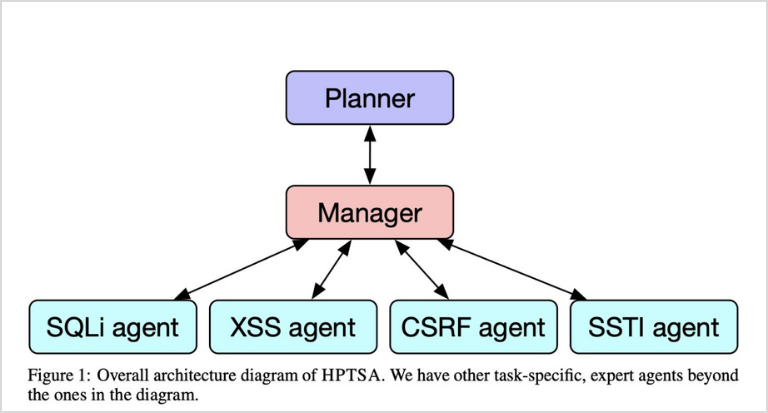

We are increasingly using AI-based agents to help with daily tasks and boost productivity in the office (when the CISO is not watching, of course!). But what if bad actors used AI agents to find and exploit vulnerabilities? This is precisely what a team at the University of Illinois Urbana-Champaign researched with considerable success. They developed a set of task-specific agents and an orchestrator to probe the target system and dispatch appropriate agents to identify and exploit vulnerabilities.

Source: Teams of LLM Agents can Exploit Zero-Day Vulnerabilities

LLMs and GPTs aren’t the only AI technologies that lend themselves to offensive cybersecurity. The real world is a dynamic environment with inherent randomness, and adversaries must constantly evaluate and adapt to it. Reinforcement Learning (RL), in which an agent learns by trial and error which actions earn the most reward, is a natural fit for navigating such an environment to achieve a goal. One example is the Pwnagotchi, a do-it-yourself Raspberry Pi Zero-based device that uses deep reinforcement learning to get better at collecting WPA key material from the WiFi access points in its vicinity.
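The trial-and-error loop behind reinforcement learning can be sketched with a far simpler technique than the deep RL used by devices like the Pwnagotchi: an epsilon-greedy multi-armed bandit. Everything in this sketch is an illustrative assumption, including the function name `run_bandit` and the abstract actions and success probabilities. It shows only how an agent can learn which action pays off best in a noisy environment without being told the odds in advance.

```python
import random


def run_bandit(success_probs, steps=3000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: learn which abstract action succeeds most often.

    `success_probs` are hidden from the agent; it only observes 0/1 rewards.
    """
    rng = random.Random(seed)
    n_actions = len(success_probs)
    counts = [0] * n_actions    # how often each action was tried
    values = [0.0] * n_actions  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore a random action
        else:
            # exploit the action with the best estimate so far
            action = max(range(n_actions), key=values.__getitem__)
        reward = 1.0 if rng.random() < success_probs[action] else 0.0
        counts[action] += 1
        # incremental update of the running mean
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


if __name__ == "__main__":
    values, counts = run_bandit([0.2, 0.5, 0.8])
    print("estimated success rates:", [round(v, 2) for v in values])
    print("times each action was tried:", counts)
```

The small exploration rate is what lets the agent keep adapting: it mostly repeats what has worked, but occasionally tries alternatives in case the environment has more to offer.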

Source: Pwnagotchi: Deep Reinforcement Learning for WiFi pwning!

We’ve looked at a few examples of how bad actors can abuse AI technologies. An obvious question is how we respond. Can AI be used to fight AI? We’ve just seen how Google has helped the community by releasing an AI-based fuzzing framework to help get ahead of the curve. Many researchers and organizations are also developing AI-based deep fake detectors, and reinforcement learning can be applied to early detection of AI-based attacks.

However, we should recognize that offensive AI vs. defensive AI will not result in a stalemate. There will be wins on both sides. Unfortunately for businesses, even a single loss means a breach, and an uncontained breach can cause catastrophic damage. Adopting a Zero-Trust strategy becomes even more critical because it helps contain the breach and makes the business “breach-ready.”

Microsegmentation: The Core of Breach Readiness

A zero-trust strategy typically encompasses users, devices, networks, applications, and data. Microsegmentation is a critical capability that implements least privilege access from users and devices to applications and data while limiting lateral movement within the network. This significantly reduces the blast radius of a system that an AI-based attack may compromise and reduces the attack surface for neighboring systems.
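The effect of limiting lateral movement can be illustrated with a small reachability calculation. This is a simplified sketch under stated assumptions, not a model of any product: the workload names, the two example policies, and the `blast_radius` function are all hypothetical. It treats the allowed network flows as a directed graph and counts every workload an attacker could reach from one compromised system, which is a worst-case upper bound that assumes each reachable workload can itself be compromised.

```python
from collections import deque


def blast_radius(allowed_flows, compromised):
    """Return the workloads reachable from `compromised` via allowed flows."""
    reachable = {compromised}
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for dst in allowed_flows.get(node, ()):
            if dst not in reachable:
                reachable.add(dst)
                queue.append(dst)
    return reachable - {compromised}


# Hypothetical workloads in a small environment.
workloads = ["web", "app", "db", "hr", "backup"]

# Flat network: every workload may talk to every other workload.
flat = {w: [x for x in workloads if x != w] for w in workloads}

# Microsegmented: only the flows the application actually needs are allowed.
segmented = {"web": ["app"], "app": ["db"]}

if __name__ == "__main__":
    for name, policy in (("flat", flat), ("segmented", segmented)):
        for start in ("web", "hr"):
            reach = sorted(blast_radius(policy, start))
            print(f"{name:9s} compromise of {start!r} can reach: {reach}")
```

In the flat policy, a compromise anywhere exposes every other workload. In the segmented policy, a compromised `hr` workload reaches nothing, and a compromised `web` workload is confined to the application tier it legitimately depends on.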

For more details on how ColorTokens can enable scalable microsegmentation and deliver visible results within 90 days, please contact us.